Operationalize full-spectrum AI and LLMs

Powering the world’s most ambitious AI projects

Key Features

and leverage them to build your custom models.

VESSL Hub

Discover AI models for VESSL Run and VESSL Service

Jumpstart your projects with the VESSL Run and Service template YAMLs listed on VESSL Hub.


Explore VESSL Hub

VESSL Run

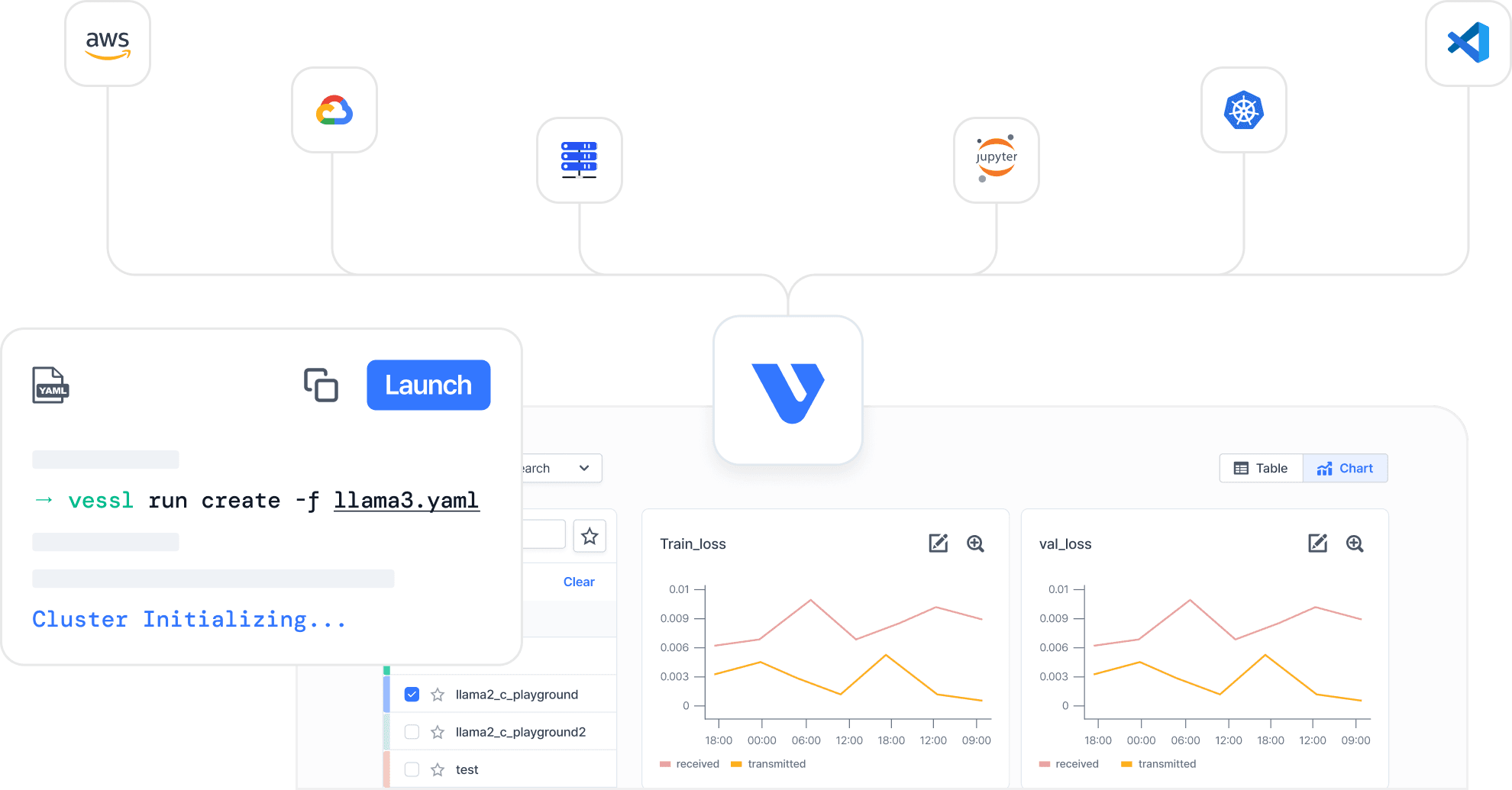

Train models with a single command

Train on multiple clouds

Deploy custom AI models and LLMs on any infrastructure in seconds and scale inference with ease.

Ready for large scale workloads

Handle your most demanding tasks with batch job scheduling, and pay only for what you use with per-second billing.

Maximize cost efficiency

Optimize costs with efficient GPU usage, spot instances, and built-in automatic failover.

One-command configuration

Launch training with a single command and a YAML configuration, simplifying complex infrastructure setups.

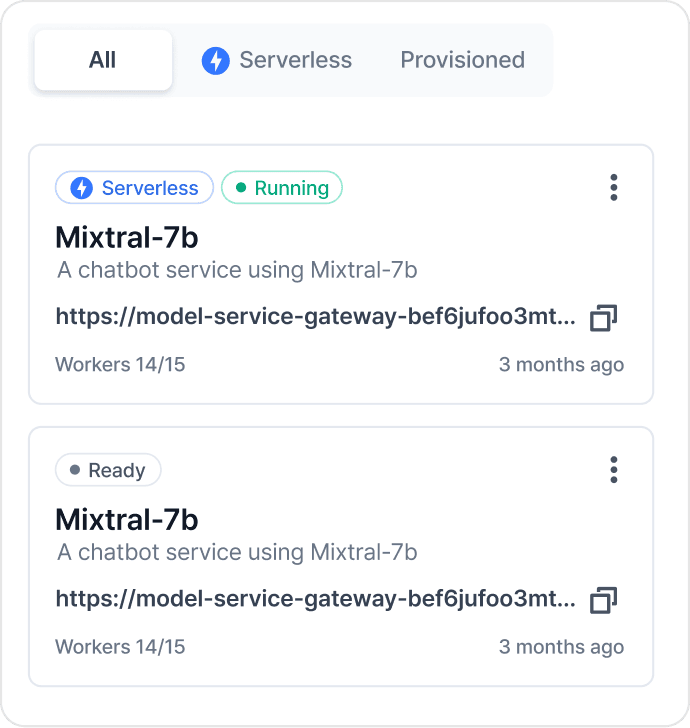

VESSL Service

Deploy your models at scale, anywhere

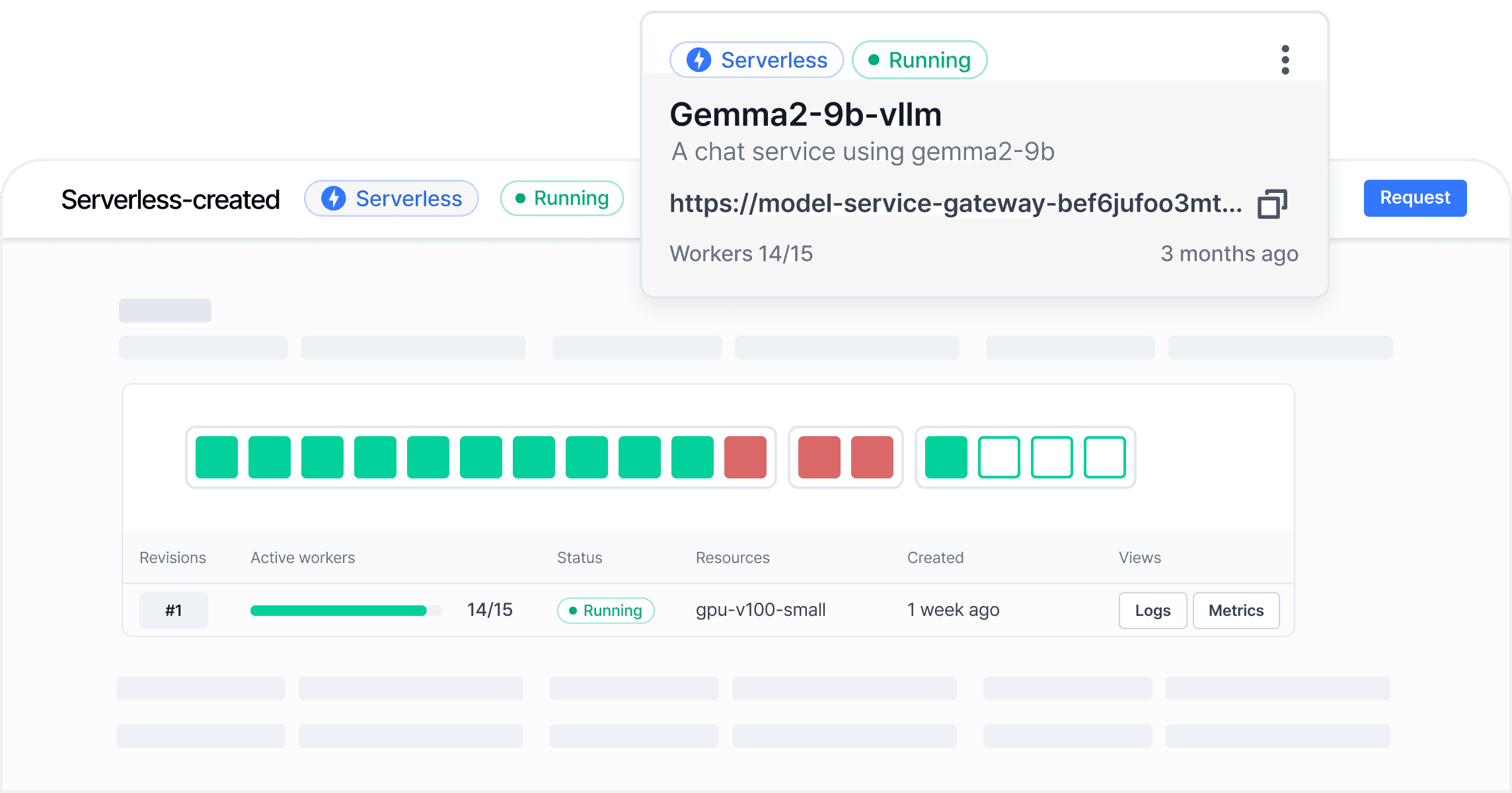

Production ready

Automatically scale up workers during high traffic and scale down to zero during inactivity.

Serverless deployment

Deploy cutting-edge models with persistent endpoints in a serverless environment, optimizing resource usage.

Real-time monitoring

Monitor system and inference metrics in real-time, including worker count, GPU utilization, latency, and throughput.

Traffic management

Efficiently conduct A/B testing by splitting traffic among multiple models for evaluation.

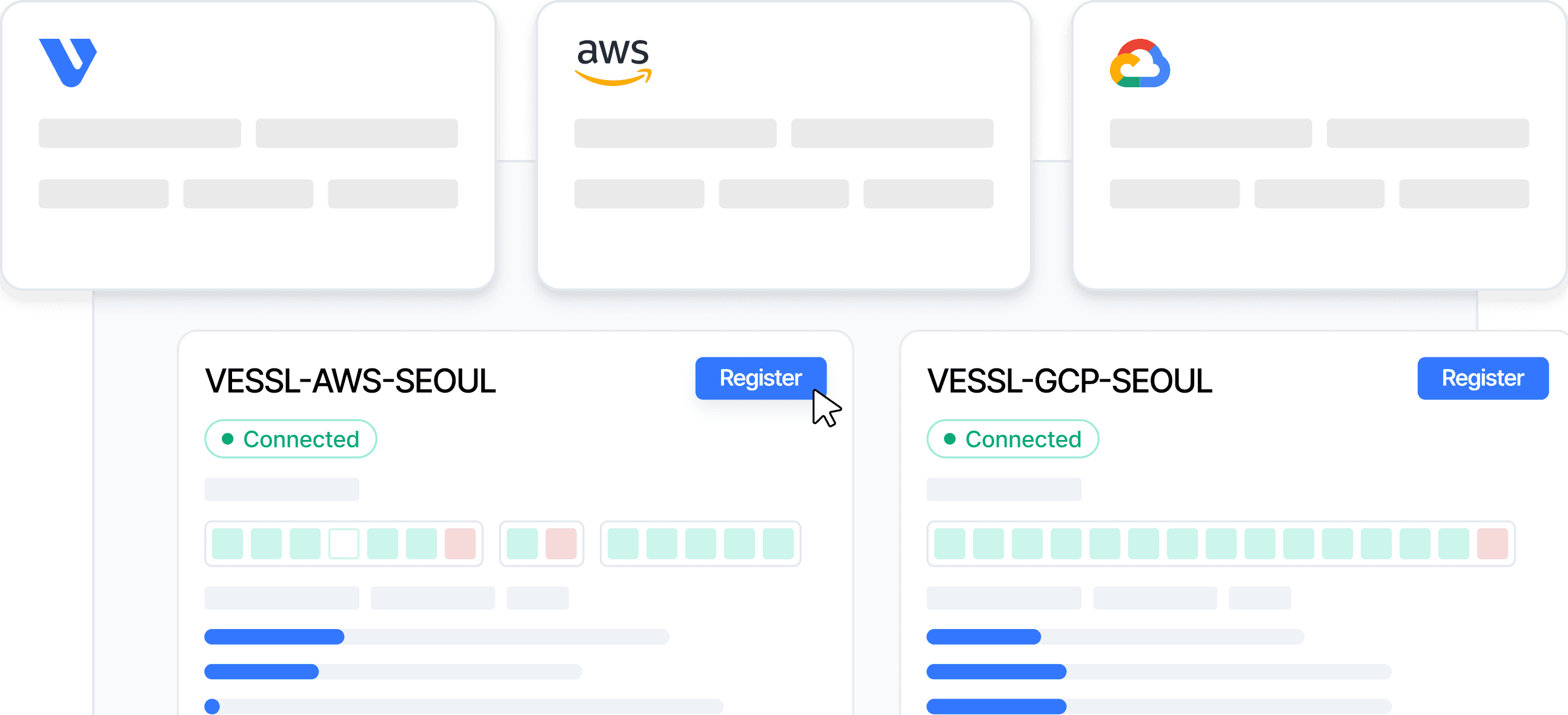

VESSL Cluster

Compute interface for cluster usage

Unified interface

Use a consistent web console and command-line interface across all cloud providers and on-premises clusters.

Resource provisioning

Minimize management costs with a real-time administrative cluster dashboard.

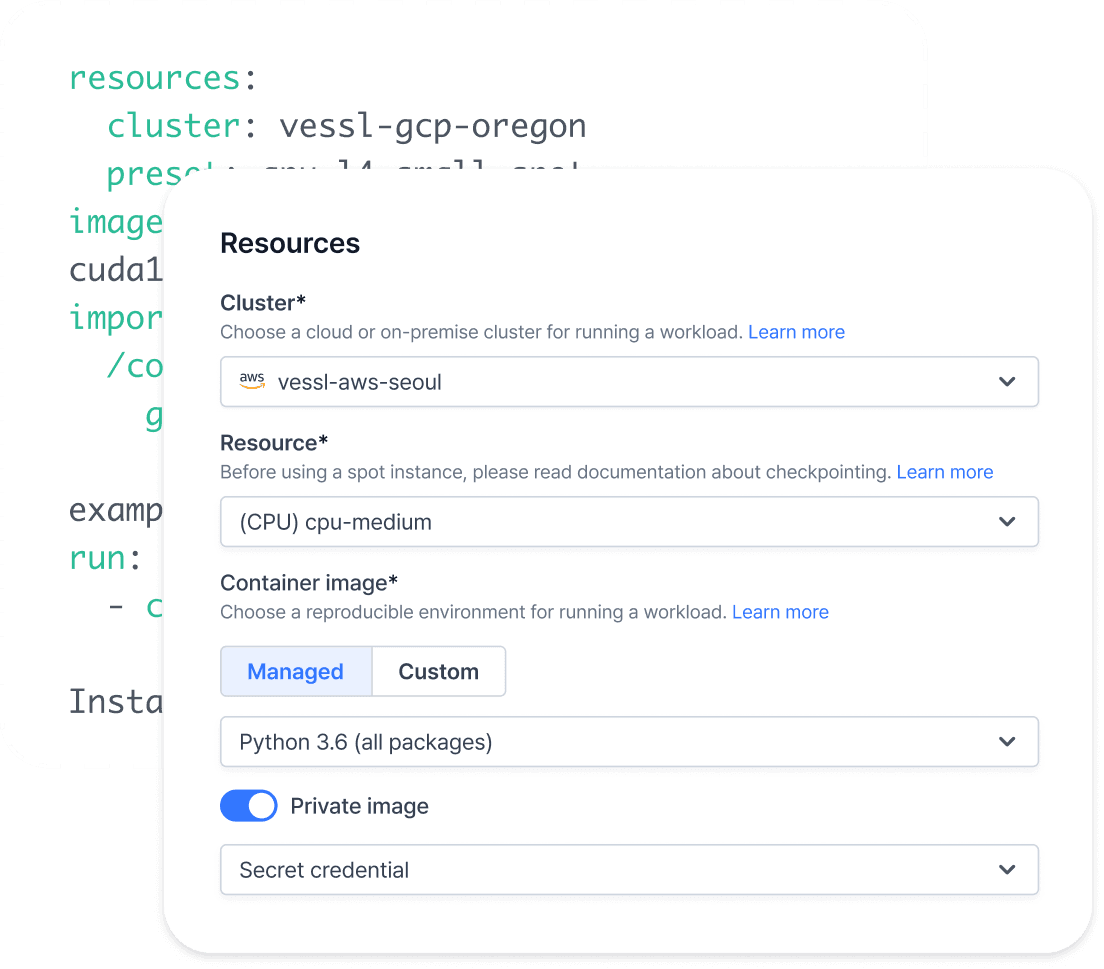

Custom resource specifications

Customize resource spec presets, including GPU type and quantity, to meet your specific needs.

Priority scheduling

Prioritize critical workloads to maximize outcomes and efficiency.

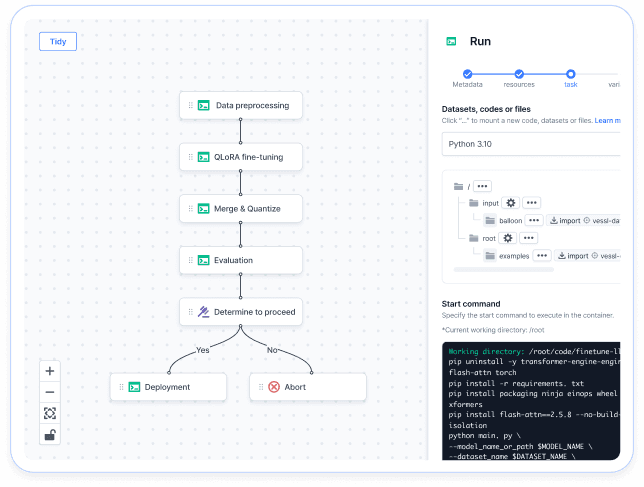

VESSL Pipeline

Automate AI workflows with end-to-end CI/CD

Tailored for sophisticated machine learning workflows, simplifying cron job management and ensuring debuggability.


Read our docs

Our Partners

Join the world’s most ambitious machine learning teams

Provisions 1000+ GPUs to 200+ ML researchers.

Provisions GPU clusters and hosted the fastMRI challenge.

One of the best tools for fine-tuning LLMs.

We opted for VESSL over AWS SageMaker.

Best platform to efficiently utilize computing resources.

Integrated 100TB+ of data from multiple regions, streamlined data pipelines, and reduced model deployment time from 5 months to a week.

The first and only MLOps tool for testing and deploying our generative AI models.


Unlock a better ML workflow

Get started with VESSL AI

Connect your infrastructure with a single command and use VESSL AI with your favorite tools.