Pick your GPU.

Start building.

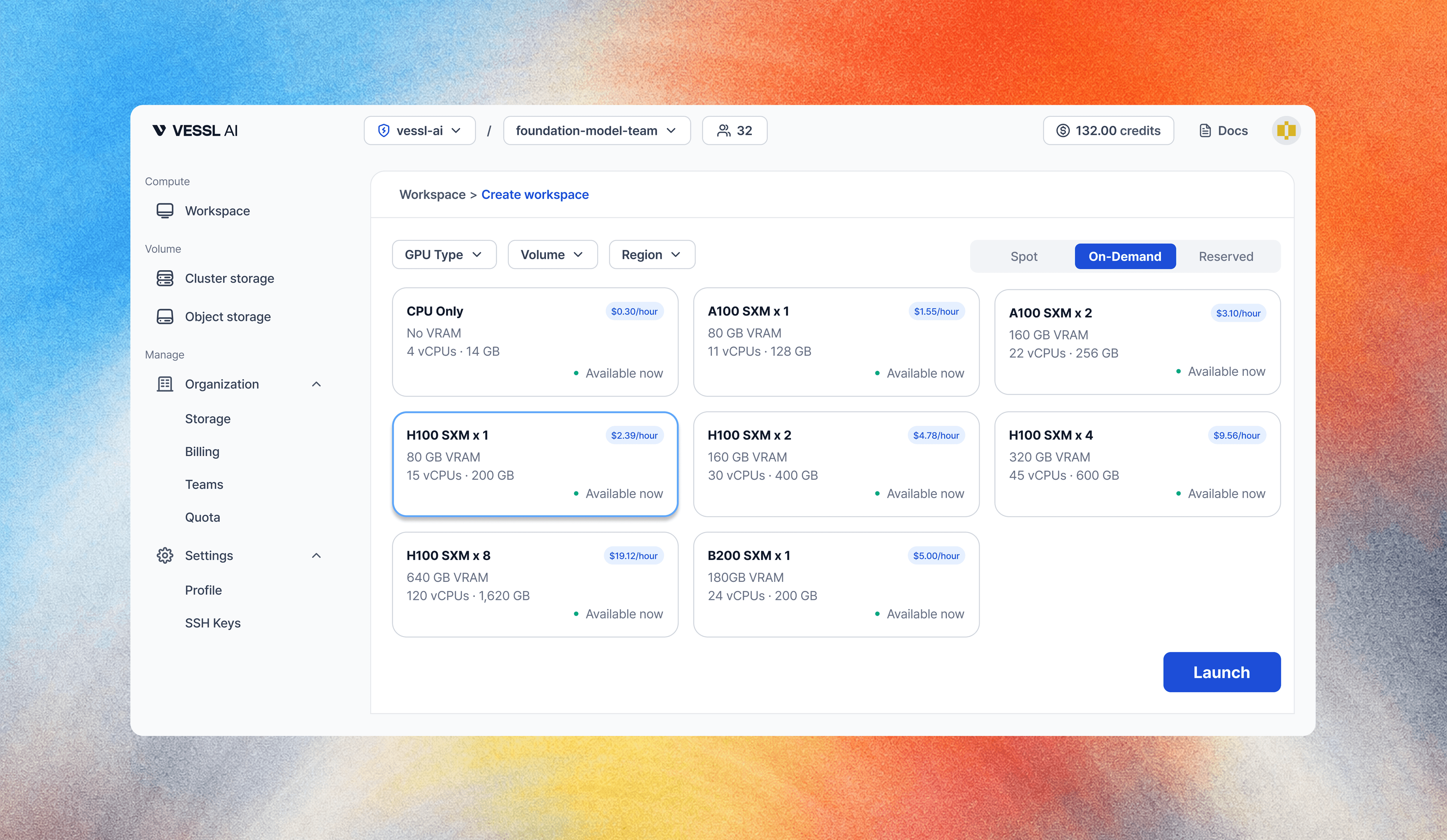

Choose from H100, A100, H200, B200, and more. Spin up in minutes, scale on demand, and pay only for what you use.

No waitlists. No complexity. Just GPUs.

GPUs, your way.

The simplest path from zero to running AI workloads.

Choose spot, on-demand, or reserved capacity. Pick from H100, A100, H200, B200, and more. Mix and match to fit your workload and budget.

Move fast.

Scale faster.

Built for teams who can't wait for GPUs.

Stop waiting on cloud quotas. Access H100, A100, H200, and B200 GPUs across multiple providers through one platform. Scale from prototype to production without re-architecting.

- No quota limits or waitlists

- Multi-cloud failover built-in

- Pay-as-you-go pricing

- Production-ready reliability

Everything you need to run AI at scale.

Trusted by leading teams

Customers including enterprises, startups, government, and academia

Strategic partnerships

Cloud partners

Platform monitoring

Built for real workloads

Web Console

Visual cluster management

CLI

`vessl run` native workflows

Auto Failover

Seamless provider switching

Multi-Cluster

Unified view across regions

GPU products for every stage

From research to production, pick the reliability level that matches your needs.

Spot

Best-effort, lowest cost

Best for: Research, batch jobs, experimentation

On-Demand

Reliable with failover

Best for: Production workloads

Reserved

Guaranteed capacity

Best for: Mission-critical AI

Start flexible. Scale with confidence.

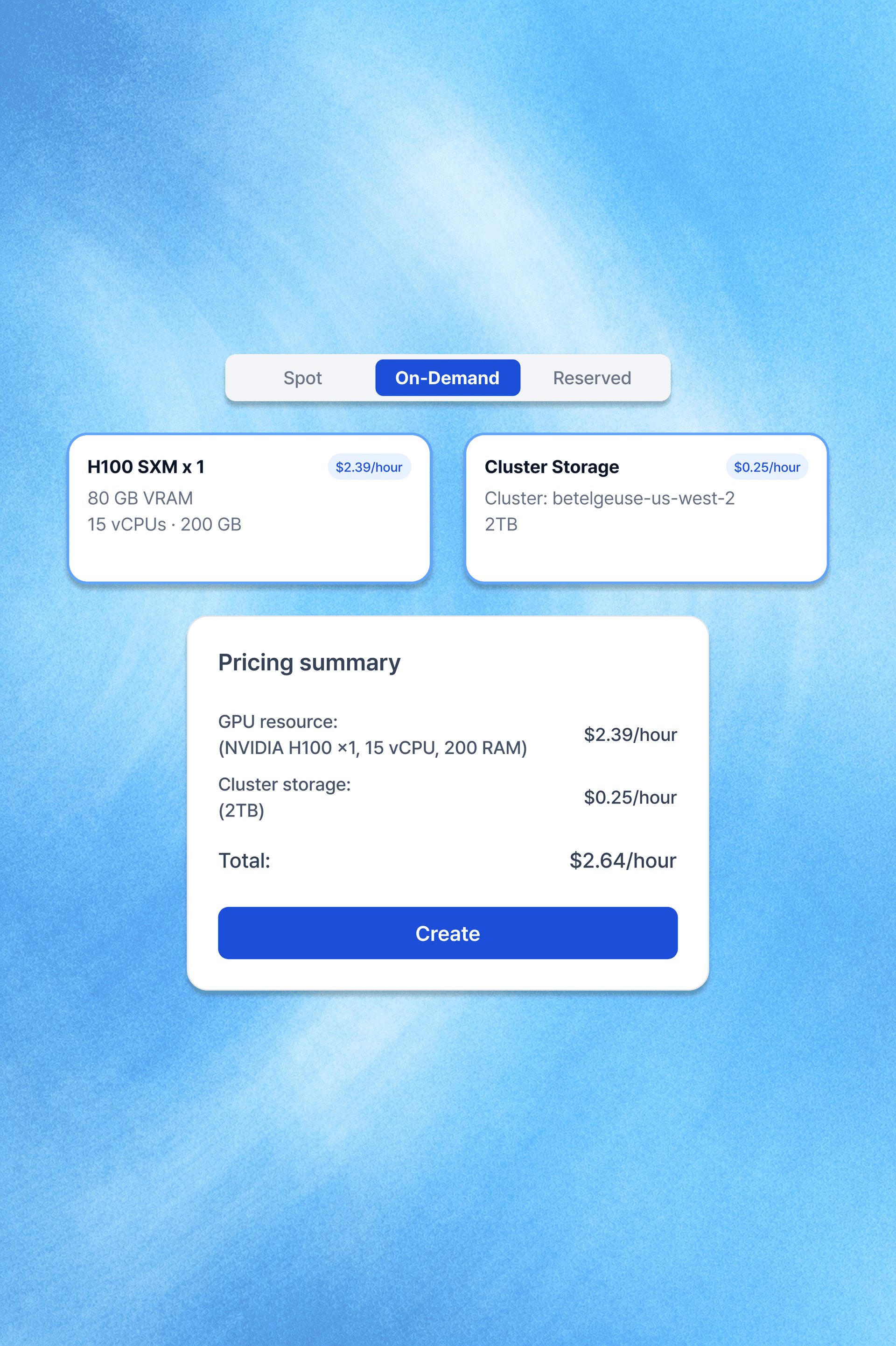

GPU Cloud Pricing

| GPU | VRAM | On-Demand | Spot | Reserved |
|---|---|---|---|---|
| H100 SXM | 80GB | $2.39/hr | Coming Soon | Contact Sales |
| A100 SXM | 80GB | $1.55/hr | Coming Soon | Contact Sales |
| B200 | 192GB | $5.00/hr | Coming Soon | Contact Sales |
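To get a rough sense of what a job might cost at the On-Demand rates listed above, the arithmetic can be sketched in a few lines of Python. This is an illustrative estimate only: it assumes each rate is billed per GPU per hour with no minimums, rounding rules, storage, or network charges, none of which are stated in the table. The function name `estimate_on_demand_cost` is hypothetical and is not part of any VESSL tool.

```python
# On-Demand hourly rates in USD, copied from the pricing table above.
# Assumption: each rate applies per GPU per hour.
ON_DEMAND_USD_PER_GPU_HOUR = {
    "H100 SXM": 2.39,
    "A100 SXM": 1.55,
    "B200": 5.00,
}


def estimate_on_demand_cost(gpu: str, gpu_count: int, hours: float) -> float:
    """Return an estimated On-Demand cost in USD, rounded to cents.

    Hypothetical helper for illustration; actual billing may differ.
    """
    if gpu not in ON_DEMAND_USD_PER_GPU_HOUR:
        raise KeyError(f"No On-Demand rate listed for {gpu!r}")
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    if hours < 0:
        raise ValueError("hours must be non-negative")
    return round(ON_DEMAND_USD_PER_GPU_HOUR[gpu] * gpu_count * hours, 2)


if __name__ == "__main__":
    # Example: an 8-GPU node running for 24 hours on each listed GPU type.
    for gpu_name in ON_DEMAND_USD_PER_GPU_HOUR:
        cost = estimate_on_demand_cost(gpu_name, gpu_count=8, hours=24)
        print(f"{gpu_name}: ${cost:,.2f}")
```

Spot and Reserved are left out because the table lists no rates for them yet.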

Frequently Asked Questions

What's the difference between Spot and On-Demand?

Spot instances use excess capacity at steep discounts but can be preempted. On-Demand provides reliable capacity with automatic failover.

What's the difference between Cluster Storage and Object Storage?

Cluster Storage allows you to share files across multiple workloads and provides faster network performance, ideal for collaborative training jobs. Object Storage is best for storing large datasets and artifacts at a lower cost.

Do you offer academic pricing?

Yes. Research labs and universities can access discounted rates and flexible terms. Contact us for details.

What's included in Reserved pricing?

Reserved plans include guaranteed capacity, dedicated support, and volume discounts. Terms start at 3 months.

Trusted by AI teams worldwide

“VESSL meaningfully reduces the time I spend on job wrangling (resource requests, environment quirks, monitoring) and shifts that time back into experiment design and analysis. In particular, reliable compute availability of VESSL allowed me to significantly reduce monitoring efforts with fire-and-forget.”

Joseph Suh, Researcher at Berkeley AI Research (BAIR)

- Time shifted from job wrangling to experiment design

- Monitoring efforts significantly reduced with fire-and-forget

Stop chasing GPUs.

Start shipping AI.

Unified access to GPU capacity across providers. One platform, transparent pricing.

- Start in minutes

- Multi-cloud failover

- High availability built-in

- 24/7 support available